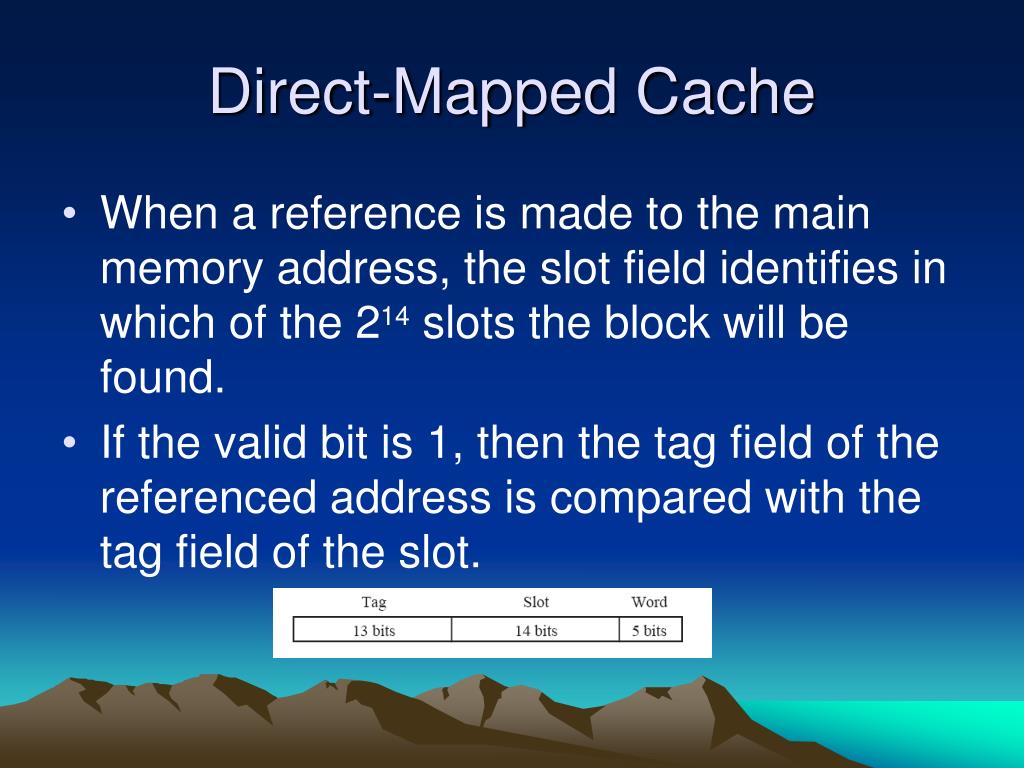

In a direct-mapped cache, the set is identified by the index bits of the address. The tag bits derived from the memory block address are compared with the tag bits associated with the set. If the tags match, there is a cache hit and the cache block is returned to the processor. Otherwise there is a cache miss and the memory block is fetched from the lower level of memory (main memory or disk). This placement policy is power efficient because it avoids searching through all the cache lines.
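
The lookup just described can be sketched in a few lines of Python. This is a minimal illustration, not a hardware model: the geometry (64-byte blocks, 8 lines) and the names `OFFSET_BITS`, `INDEX_BITS` and `lookup` are assumptions made for the example.

```python
# Hypothetical geometry: 64-byte blocks, 8 cache lines (one line per set).
OFFSET_BITS = 6
INDEX_BITS = 3

def lookup(tags, address):
    """Return True on a hit, False on a miss (the line is filled on a miss)."""
    block = address >> OFFSET_BITS            # drop the byte offset
    index = block & ((1 << INDEX_BITS) - 1)   # index bits select the set
    tag = block >> INDEX_BITS                 # remaining high bits form the tag
    if tags[index] == tag:                    # a single tag comparison
        return True
    tags[index] = tag                         # miss: block comes from lower memory
    return False

tags = [None] * (1 << INDEX_BITS)
first = lookup(tags, 0x1234)            # cold miss
second = lookup(tags, 0x1234)           # same block again: hit
third = lookup(tags, 0x1234 + 8 * 64)   # same index, different tag: miss
```

Only one stored tag is ever examined per access, which is where the power saving comes from.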

There are three different policies available for placement of a memory block in the cache: direct-mapped, fully associative, and set-associative. Originally this space of cache organizations was described using the term "congruence mapping".

In a direct-mapped cache structure, the cache is organized into multiple sets with a single cache line per set. Based on its address, a memory block can occupy only a single cache line. The cache can be framed as an n × 1 column matrix. The set is determined by the index bits derived from the address of the memory block. The memory block is placed in the identified set and its tag is stored in the tag field associated with that set. If the cache line is already occupied, the new data replaces the memory block currently in the cache.
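
As a rough sketch of direct-mapped placement, the cache can be modeled as a Python list acting as the n × 1 column. The helper names `split_address` and `place`, and the sizes (4 lines, 16-byte blocks), are hypothetical choices for illustration.

```python
def split_address(address, num_lines, block_size):
    """Split a byte address into (tag, index, offset) for a direct-mapped cache."""
    offset = address % block_size
    block_number = address // block_size
    index = block_number % num_lines   # which line (set) the block must use
    tag = block_number // num_lines    # identifies the block within that line
    return tag, index, offset

def place(cache, address, data, num_lines=4, block_size=16):
    """Store a block in its only legal line and return whatever it replaced."""
    tag, index, _ = split_address(address, num_lines, block_size)
    evicted = cache[index]
    cache[index] = (tag, data)         # the tag is stored alongside the data
    return evicted

cache = [None] * 4                     # the n x 1 column, with n = 4
evicted_first = place(cache, 0x00, "A")    # line 0 was empty
evicted_second = place(cache, 0x40, "B")   # also maps to line 0, replaces "A"
```

Addresses `0x00` and `0x40` differ only in their tag, so the second placement overwrites the first even though the other three lines are empty.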

A CPU cache is a memory that holds the data most recently used by the processor. A block of memory cannot necessarily be placed anywhere in the cache; the cache placement policy may restrict it to a single cache line or to a set of cache lines. In other words, the cache placement policy determines where a particular memory block can be placed when it goes into the cache. Cache placement policies are not to be confused with cache replacement policies.

Fully associative caches hold a few dozen entries at most. Even L1i and L1d caches are bigger than that and require a combined approach: the cache is divided into sets, and each set consists of "ways". Sets are directly mapped, and each set is fully associative within itself. The number of ways is usually small; for example, the Intel Nehalem CPU has 4-way (L1i), 8-way (L1d, L2) and 16-way (L3) sets. An N-way set-associative cache pretty much solves the problem of temporal locality and is not too complex to be used in practice.

I would highly recommend a 2011 course by UC Berkeley, "Computer Science 61C", available on the Internet Archive. In addition to other material it contains 3 lectures about memory hierarchy and cache implementations. There is also a 2015 edition of this course freely available on YouTube.
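
The set-associative scheme described above (sets chosen directly, ways searched associatively) might look like this in Python. It is a sketch under simplifying assumptions: the class `SetAssociativeCache` is hypothetical, it tracks block numbers rather than byte addresses, and it uses exact LRU within each set.

```python
from collections import OrderedDict

class SetAssociativeCache:
    """Toy N-way set-associative cache that tracks tags only (no data)."""

    def __init__(self, num_sets, ways):
        self.num_sets = num_sets
        self.ways = ways
        # One ordered dict per set, ordered from least to most recently used.
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, block_number):
        """Return True on a hit, False on a miss (the block is then cached)."""
        index = block_number % self.num_sets   # the set is directly mapped
        tag = block_number // self.num_sets
        ways = self.sets[index]
        if tag in ways:                        # associative search within the set
            ways.move_to_end(tag)              # mark as most recently used
            return True
        if len(ways) >= self.ways:
            ways.popitem(last=False)           # evict the least recently used way
        ways[tag] = None
        return False

two_way = SetAssociativeCache(num_sets=4, ways=2)
results = [two_way.access(b) for b in (0, 4, 0, 8, 4)]

one_way = SetAssociativeCache(num_sets=4, ways=1)   # degenerates to direct-mapped
dm_results = [one_way.access(b) for b in (0, 4, 0)]
```

Blocks 0, 4 and 8 all map to set 0. With two ways, block 0 survives the arrival of block 4 and hits on its second access; with a single way it is evicted immediately.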

In short, you have basically answered your own question. These are two different ways of organizing a cache (another one is n-way set-associative, which combines both and is the one most often used in real-world CPUs).

A direct-mapped cache is simpler (it requires just one comparator and one multiplexer), and as a result it is cheaper and faster. Given any address, it is easy to identify the single entry in the cache where it can be. A major drawback of a DM cache is the conflict miss, which occurs when two different addresses correspond to the same entry in the cache. Even if the cache is big and contains many stale entries, it can't simply evict those, because the position within the cache is predetermined by the address.

A fully associative cache is much more complex, and it allows an address to be stored in any entry. To check whether a particular address is in the cache, it has to compare against all current entries (the tags, to be exact). Besides, in order to exploit temporal locality, it must have an eviction policy. Usually an approximation of LRU (least recently used) is implemented, but that adds further comparators and transistors to the design and, of course, takes some time. Fully associative caches are practical only for small caches (for instance, the TLB caches on some Intel processors are fully associative), and those caches are small, really small.
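
To make the conflict-miss drawback concrete, here is a small Python comparison of the two organizations on the same access trace. The function names and the trace are made up for illustration, and the fully associative version uses exact LRU where real hardware would normally use an approximation.

```python
def direct_mapped_misses(blocks, num_lines):
    """Count misses when each block number has exactly one legal line."""
    lines = [None] * num_lines
    misses = 0
    for block in blocks:
        index = block % num_lines
        if lines[index] != block:
            lines[index] = block       # whatever was there is overwritten
            misses += 1
    return misses

def fully_associative_misses(blocks, num_lines):
    """Count misses when a block may occupy any line (exact LRU eviction)."""
    entries = []                       # ordered least to most recently used
    misses = 0
    for block in blocks:
        if block in entries:           # compares against every stored entry
            entries.remove(block)
        else:
            misses += 1
            if len(entries) == num_lines:
                entries.pop(0)         # evict the least recently used entry
        entries.append(block)
    return misses

trace = [0, 8, 0, 8, 0, 8]             # blocks 0 and 8 share line 0 when n = 8
dm_misses = direct_mapped_misses(trace, 8)
fa_misses = fully_associative_misses(trace, 8)
```

With 8 lines, the direct-mapped cache misses on every access because the two blocks keep evicting each other, while the fully associative cache misses only on the first touch of each block. The price is the `block in entries` scan, which stands in for comparing every tag in parallel in hardware.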
